BUILDlab, LLC

Apidae is a cloud-based computational engine built specifically for high-performance simulation of building energy models. Users can interact with Apidae through a regular web browser (no additional software needs to be installed) or through a powerful application programming interface (API). It allows users to run thousands of simulations concurrently, and we implemented statistical algorithms to evaluate models as well as search algorithms to find models with specific characteristics.

I was a core team member responsible for all aspects of the project, including designing the system, creating install scripts for the cloud instances, maintaining the development virtual machines (VMs), creating database schemas and queries for metadata and high-level results, processing and visualizing simulation results, and managing sensor and weather data.

Rochester Institute of Technology

Automatic Projector Calibration

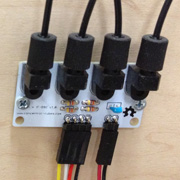

This is a reimplementation of Johnny Lee's Automatic Projector Calibration, done in collaboration with James A. Ferwerda. To make it easier for students to work on this project, we developed a sensor board with four IF-D92 light sensors that connects to a regular Arduino. We also provided code examples for the Arduino microcontroller and for Processing.

Cornell University - Program of Computer Graphics

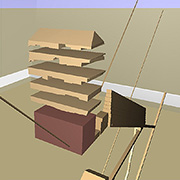

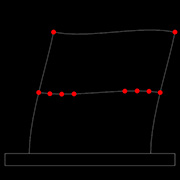

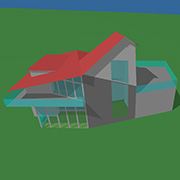

The Sustain project is a programming sandbox that allows the user to interact with data relevant to building simulation. It provides easy methods to import building data and access site information (terrain, weather, sun position, etc.). It can execute a variety of simulators, gather the results, and visualize the data.

I was a core team member responsible for all aspects of the project, including scheduling and running simulations on a cluster, collecting and visualizing simulation results, importing data from modeling software, accessing weather data, visualizing input data (sun and weather), and programming the interface, among other tasks.

As part of the User Interface Development Project, I built a table-sized multi-touch interface. I did the research and the design, built all of the electronics, helped with most of the mechanical setup, and set up all of the software. I am experimenting with multi-touch technology and user interfaces.

ITI VisionMaker PS Digital Drafting Table

Cornell's Program of Computer Graphics has two of these great drafting tables, which are perfect for designers and architects. Unfortunately, the driving computer was outdated and we did not have drivers for more recent operating systems. As part of the User Interface Development Project, I debugged the serial output and reverse-engineered most of the output protocol. I helped a student write a program that simulates mouse events based on interactions with the table.

Converting Cornell Cinema to a 3D Movie Theater

When Phil “Captain 3-D” McNally (DreamWorks) planned to come to Cornell's campus, he asked a simple question: “Can we show our stereo content in 3D?” So we converted Cornell Cinema to a 3D movie theater.

University of Michigan - 3D Lab

The University of Michigan VR Lab created the world's first functional virtual football trainer. We also invented a method for automatically creating a play animation from a play chart (patent 20030227453 pending).

NEES Project - funded by National Science Foundation (NSF)

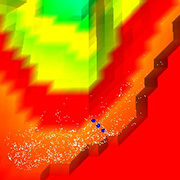

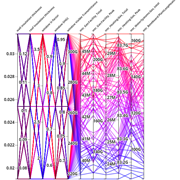

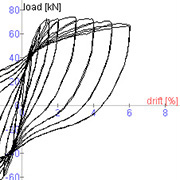

I created the Web DataViewer, which allows the user to look at data sets collected in earthquake experiments (e.g., hundreds of sensors collecting data thousands of times per second, over periods ranging from a few minutes to several hours). I integrated the DataViewer into the web-based collaboration environment using the CHEF framework. I also conducted the user requirements study.

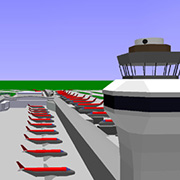

The University of Michigan VR Lab collaborated with Northwest Airlines in planning the Midfield Terminal Extension. We created VRML models for web collaboration and planning. I created a web-based application for remote presentation, created a CAVE model for on-site presentation, assisted with the conversion of the geometric model, and served as acting team lead.

Medical Readiness Trainer (MRT)

The University of Michigan VR Lab created a training simulator for emergency personnel. We overlaid a physical human patient simulator with a virtual world. I created the interactive 3D user interface and added stress factors to the virtual simulation.

Virtual Disaster Simulator - funded by the Centers for Disease Control (CDC)

The University of Michigan 3D Lab created a triage training simulator for emergency room and first responder personnel. I wrote a program that allows users to sketch out the scene in 2D (location of props and people over time) and generates 3D models and animation files for the CAVE. I conducted many human subject tests and analyzed the results.

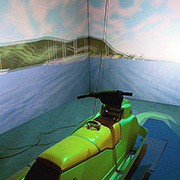

Virtual Jet Ski Driving Simulator

The University of Michigan VR Lab created a fully functional jet ski simulator for the U.S. Coast Guard.

I integrated sensor data acquisition with the physics simulation system and helped write the underlying core system. I also conducted many human subject tests and statistically analyzed the results.

I consulted for Sandy Arlinghaus (University of Michigan faculty, School of Natural Resources & Environment) on Google Earth and SketchUp problems. I taught classes on creating and cleaning up textures for 3D models. I also helped with Google Earth's competition “Build your own Campus”.

Google Earth

I created georeferenced data sets for Google Earth. I also created a tutorial and taught students how to use Google Earth. I integrated the University of Michigan Magic Bus (mbus) into Google Earth, work that was published in SOLSTICE: An Electronic Journal of Geography and Mathematics.

3D Uterus Animation

I created a 3D visualization of simulation results of uterine activity during the human birth process for Mel Barclay, MD (University of Michigan faculty, Medical School).

WaterNow

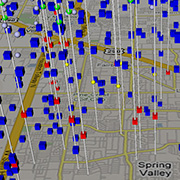

I created 3D visualizations of water quality in VRML and Google Earth.

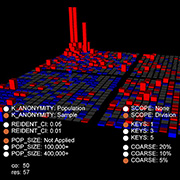

Artificial Geographies

I created a 3D visualization of simulation output on disclosure risk in public-use data sets for Kristine Witkowski (University of Michigan, research staff, ICPSR).

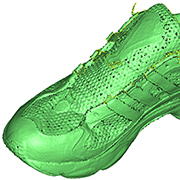

Handyscan3D

I scanned physical objects and cleaned up the data. This page explains the clean-up process.

Modeling a Head

I studied different methods of creating 3D geometry, analyzing various capture and clean-up techniques.

The University of Michigan 3D Lab assisted Professor Dan Fisher (University of Michigan) in the creation of a full 3D model of a mastodon skeleton. I created reference material for the 3D model and helped clean up the data to produce a geometric model suitable for real-time applications.

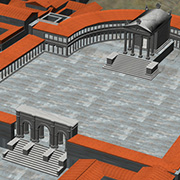

The University of Michigan 3D Lab worked with the Kelsey Museum on a real-time model of Antioch for the CAVE. I assisted the students with technical problems and helped integrate the model into the CAVE.

I studied the usefulness of VRML in scientific visualization, education, and art. I wrote conversion programs to visualize data, created virtual tours, and scripted interfaces.

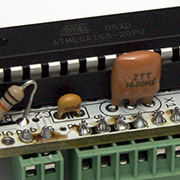

Microcontrollers (like Arduino)

I work with microcontrollers (like Arduino) to connect various sensors and input devices to real-time applications.

3D video broadcasting

I evaluated video broadcasting of stereo content and did test runs at remote shows.

3D video / 3D audio recording

I helped with 3D video and 3D audio recording events. We built a custom Rig3D. I helped with the post-production of the material.

GeoWall for Detroit Science Center

I volunteered to design and integrate the new stereo projection system for the Detroit Science Center.

I consulted on the hardware specifications and the physical layout, created the electronic interface, and programmed a stereo video player.

University “Otto von Guericke” Magdeburg (Germany)

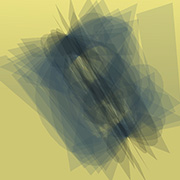

My Master's thesis: “A parametric model for the rendering of lines”

I created a new library to support the non-photorealistic rendering research at the University “Otto von Guericke” Magdeburg, Germany. Here are some examples (partially in German).