Input Devices

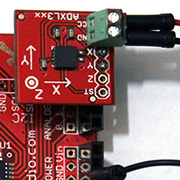

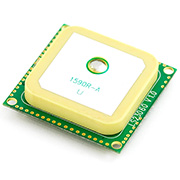

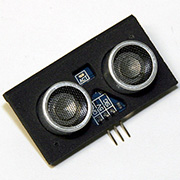

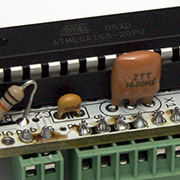

I work with microcontrollers (Arduino, EZIO board, etc.) to connect various sensors and input devices to real-time applications, such as the Jetski project.

I design and build new input devices, such as the Multi-touch Interface.

I create wired and wireless sensor networks that log data (for example from a WM-918 weather station, or from temperature, relative humidity, pressure, ambient light, and heart rate sensors), store the data in databases, and make it accessible on web servers. I also connect sensors to real-time applications using sockets, OSC, or VRPN.

Immersive Environments (Display Technologies)

CAVE

I experimented with the University of Michigan 3D Lab's CAVE for many years. I was interested in improving human-computer interaction in realistic immersive environments. I programmed the CAVE, created and converted content, implemented projects, and worked with ImmersionGraphics on upgrades and maintenance.

With the help of several students, I created programming libraries that make the CAVE easier to use.

The University of Michigan 3D Lab's CAVE has four walls (1024×1024 pixels each) and a Vicon motion capture system.

Wide-Screen Projection System (AccessGrid)

I experimented with tiled projection systems. I started with the AccessGrid, which was designed for video conferencing. It used three projectors side by side (3×1, 3072×768 in total), which allowed multiple data sets and video streams to be presented at once. I helped build the system and operated it for many conferences. I worked with Internet2 to meet its special network requirements.

After upgrading the system, I experimented with using it for the demonstration and real-time exploration of 3D data.

Stereo Projection System (GeoWall)

I helped set up the first couple of GeoWalls in Michigan (University of Michigan: Geological Sciences).

I played a crucial role in designing six more systems (University of Michigan 3D Lab, University of Michigan: Department of Emergency Medicine, University of Michigan: Architecture, University of Michigan: ERC/RMS, Detroit Science Center, and Cornell University: Cinema).

High-resolution Tiled Display (GeoWall2)

At the University of Michigan 3D Lab, I set up a small 7.5-megapixel system with 3×2 screens (3840×2048) driven by a single computer. I also built a large 35-megapixel system with 4×4 screens (7680×4800) driven by eight display computers and one control computer.
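The geometry behind such a wall can be sketched as follows: each screen shows one rectangle of the combined image, and the rectangles are split among the display computers. This is a minimal illustration, not the lab's actual software; the per-screen resolutions (1280×1024 and 1920×1200) are derived by dividing the stated totals by the grid size, and the rule of giving each host consecutive screens in row order is a hypothetical assignment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tile:
    column: int
    row: int
    x: int       # left edge of this screen within the combined image, in pixels
    y: int       # top edge of this screen within the combined image, in pixels
    width: int
    height: int


def tile_layout(columns: int, rows: int, screen_w: int, screen_h: int) -> list[Tile]:
    """Return the pixel rectangle each screen covers, in row-major order."""
    return [
        Tile(c, r, c * screen_w, r * screen_h, screen_w, screen_h)
        for r in range(rows)
        for c in range(columns)
    ]


def assign_to_hosts(tiles: list[Tile], hosts: int) -> dict[int, list[Tile]]:
    """Split tiles evenly across display computers, consecutive tiles per host (hypothetical rule)."""
    if len(tiles) % hosts:
        raise ValueError("tiles do not divide evenly among hosts")
    per_host = len(tiles) // hosts
    return {h: tiles[h * per_host:(h + 1) * per_host] for h in range(hosts)}
```

With `tile_layout(4, 4, 1920, 1200)` and eight hosts, each computer drives two horizontally adjacent screens. The small wall covers 3840 × 2048 = 7,864,320 pixels and the large one 7680 × 4800 = 36,864,000 pixels, so the quoted 7.5 and 35 megapixel figures correspond to counting a megapixel as 2^20 pixels.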

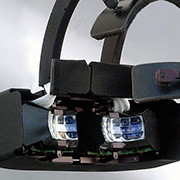

I used several head-mounted displays, primarily the Kaiser HMD, which I used for "Augmented Reality for Hazard Detection", a project funded through "Presidential Initiatives". Our team connected a Flock of Birds tracking device and programmed the display with Performer on an SGI Onyx. I also demonstrated the BOOM technology and wrote programs for it with the Performer toolkit, and I evaluated Sensics' tiled display.

Scanners and Tracking Devices

I used several 3D scanners, primarily the Handyscan 3D. I configured the software, operated the device, and cleaned up the resulting geometric models.

I worked with the University of Michigan 3D Lab's Vicon system, which uses eight MX13 cameras. I calibrated the system, set up markers, and recorded motion. I also worked on integrating it as a tracking system for the CAVE environment.

Tracking Devices

I experimented with Ascension's Flock of Birds and Polhemus' FASTRAK. I wrote software that communicates directly with the tracker's control unit over its serial protocol. I also integrated the trackers into various real-time software toolkits using client/server applications such as trackd and VRPN.

I used several sensors connected to microcontrollers to measure the tilt, location, and distance of objects, or to track points.
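As a rough illustration of the arithmetic such sensors involve, the sketch below converts a static 3-axis accelerometer reading into tilt angles and an ultrasonic echo time into a distance. The source does not name the sensors used, so both sensor types are assumptions. The pitch/roll convention is one common choice and is only valid when the sensor is at rest (measuring gravity alone); on a microcontroller the same math would normally run in C.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value in dry air at about 20 °C


def tilt_degrees(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Pitch and roll in degrees from a static accelerometer reading (any consistent unit)."""
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def echo_distance_m(echo_time_s: float) -> float:
    """Distance to an object from an ultrasonic round-trip echo time (halved: out and back)."""
    return echo_time_s * SPEED_OF_SOUND_M_PER_S / 2.0
```

A sensor lying flat reads roughly (0, 0, 1 g) and yields zero pitch and roll, and a 10 ms echo corresponds to about 1.7 m. Roll becomes unreliable as pitch approaches ±90°, where both ay and az approach zero.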

Rapid Prototyping

I worked with several rapid prototyping machines, primarily Z Corp's 3D printer product line (Z310 and Z510). This involved cleaning up the geometric models, preparing the machines, cleaning up the printed models, and finishing them with epoxy or cyanoacrylate (CA). I also worked with the Dimension Elite machine, which builds plastic parts using fused deposition modeling (FDM).